As a package developer, there are quite a few things you need to stay on top of. Following bug reports and feature requests of course, but also regularly testing your package, ensuring the docs are up-to-date and typo-free… Some of these things you might do without even pausing to think, whereas for others you might need more reminders. Some of these things you might be ok doing locally, whereas for others you might want to use some cloud services. In this blog post, we shall explore a few tools that make your workflow smoother.

What automatic tools?

I’ve actually covered this topic a while back on my personal blog but here is an updated list.

Tools for assessing

- R CMD check or devtools::check() will check your package for adherence to some standards (e.g., what folders can there be) and run the tests and examples. It’s a useful command to run even if your package isn’t intended to go on CRAN.

- For Bioconductor developers, there is BiocCheck that “encapsulates Bioconductor package guidelines and best practices, analyzing packages”.

- goodpractice and lintr both provide you with useful static analyses of your package.

- covr::package_coverage() calculates test coverage for your package. Having a good coverage also means R CMD check is more informative, since it means it’s testing your code. 😉 covr::package_coverage() can also provide you with the code coverage of the vignettes and examples!

- devtools::spell_check(), wrapping spelling::spell_check_package(), runs a spell check on your package and lets you store white-listed words.
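The assessment tools above could be gathered into one helper, as in the minimal sketch below. The function name assess_package() is hypothetical, and the sketch assumes devtools, covr, lintr, goodpractice and spelling are installed. Note that goodpractice::gp() runs R CMD check and covr itself, so in practice you might keep only the parts you need.

```r
# Hypothetical helper (not part of any package): run the assessment tools
# on the package located at `pkg` and return all results in a named list.
assess_package <- function(pkg = ".") {
  list(
    # R CMD check, including tests and examples
    check = devtools::check(pkg),
    # coverage of tests, vignettes and examples
    coverage = covr::package_coverage(pkg, type = "all"),
    # lints found by lintr
    lints = lintr::lint_package(pkg),
    # collection of good-practice checks
    good_practice = goodpractice::gp(pkg),
    # potential typos in the documentation
    spelling = spelling::spell_check_package(pkg)
  )
}

# Usage, from the package root:
# results <- assess_package()
# results$spelling
```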

Tools for improving

That’s all good, but now, do we really have to improve the package by hand based on all these metrics and flags? Partly yes, partly no.

- styler can help you re-style your code. Of course, you should check the changes before putting them in your production codebase. It’s better paired with version control.

- Using roxygen2 is generally handy, starting with your no longer needing to edit the NAMESPACE by hand. If your package doesn’t use roxygen2 yet, you could use Rd2roxygen to convert the documentation.

- One could argue that using pkgdown is a way to improve your R package documentation for very little effort. If you only tweak one thing, please introduce grouping in the reference page.

- Even when having to write some things by hand like inventing new tests, usethis provides useful functions to help (e.g., create test files with usethis::use_test()).
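As a rough sketch, the improving tools listed above might be chained as follows. The helper name improve_package() and the test name "my-function" are hypothetical, and the sketch assumes styler, devtools (with roxygen2), pkgdown and usethis are installed. Run it on a clean Git working tree so that you can review the diff afterwards.

```r
# Hypothetical helper (not part of any package): apply automatic
# improvements to the package located at `pkg`.
improve_package <- function(pkg = ".") {
  # re-style the code in place; review the changes with version control
  styler::style_pkg(pkg)
  # regenerate man/ and NAMESPACE from the roxygen2 comments
  devtools::document(pkg)
  # build the documentation website locally
  pkgdown::build_site(pkg)
}

# Creating a test file skeleton is a one-off, interactive step:
# usethis::use_test("my-function")  # "my-function" is a made-up name
```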

When and where to use the tools?

Now, knowing about useful tools for assessing and improving your package is good, but when and where do you use them?

Continuous integration

How about learning to tame some online services to run commands on your R package at your own will and without too much effort? Apart from the last subsection, this section assumes you are using Git.

Run something every time you make a change

Knowing that your package still passes R CMD check, and what the latest value of test coverage is, is important enough to warrant running commands after every change you commit to your codebase. That is the idea behind continuous integration (CI), which has been very well explained by Julia Silge with the particular example of Travis CI.

“The idea behind continuous integration is that CI will automatically run R CMD check (along with your tests, etc.) every time you push a commit to GitHub. You don’t have to remember to do this; CI automatically checks the code after every commit.” Julia Silge

Travis CI used to be very popular with the R crowd but this might be changing, as exemplified by the latest usethis release. There are different CI providers with different strengths and weaknesses, and different levels of lock-in to GitHub. 😉

Not only does CI let you run R CMD check without having to remember to, it can also help you run R CMD check on operating systems that you don’t have locally!

You might also find the tic package interesting: it defines “CI agnostic workflows”.

The life-hack [in a now deleted tweet] by Julia Silge, “LIFE HACK: My go-to strategy for getting Travis builds to work is snooping on other people’s .travis.yml files.”, applies to other CI providers too!

What you can run on continuous integration, besides R CMD check and covr, includes deploying your pkgdown website.
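For GitHub Actions, one possible way to set this up is via usethis, as in the sketch below. The helper name setup_ci() is hypothetical. The workflow names are those of the example workflows in the r-lib/actions repository at the time of writing and might change. The sketch assumes a recent usethis and a package tracked with Git and hosted on GitHub.

```r
# Hypothetical helper (not part of any package): add GitHub Actions
# workflows to the package in the current working directory.
setup_ci <- function() {
  # R CMD check on several operating systems
  usethis::use_github_action("check-standard")
  # test coverage computed with covr
  usethis::use_github_action("test-coverage")
  # build and deploy the pkgdown website
  usethis::use_github_action("pkgdown")
}

# Usage, once per repository:
# setup_ci()
```

Each call copies a workflow file into .github/workflows/, which you can then read and tweak.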

Run R CMD check regularly

Even if you change nothing in your codebase, things might break due to changes upstream (in the packages your package depends on, in the online services it wraps…). Therefore it might make sense to schedule a regular run of your CI checking workflow. Many CI services provide that option, see e.g. the docs of Travis CI and GitHub Actions.

As a side note, remember that CRAN packages are checked regularly on several platforms.

Be lazy with continuous integration: PR commands

You can also make the most of services “on the cloud” to avoid having to run small things locally.

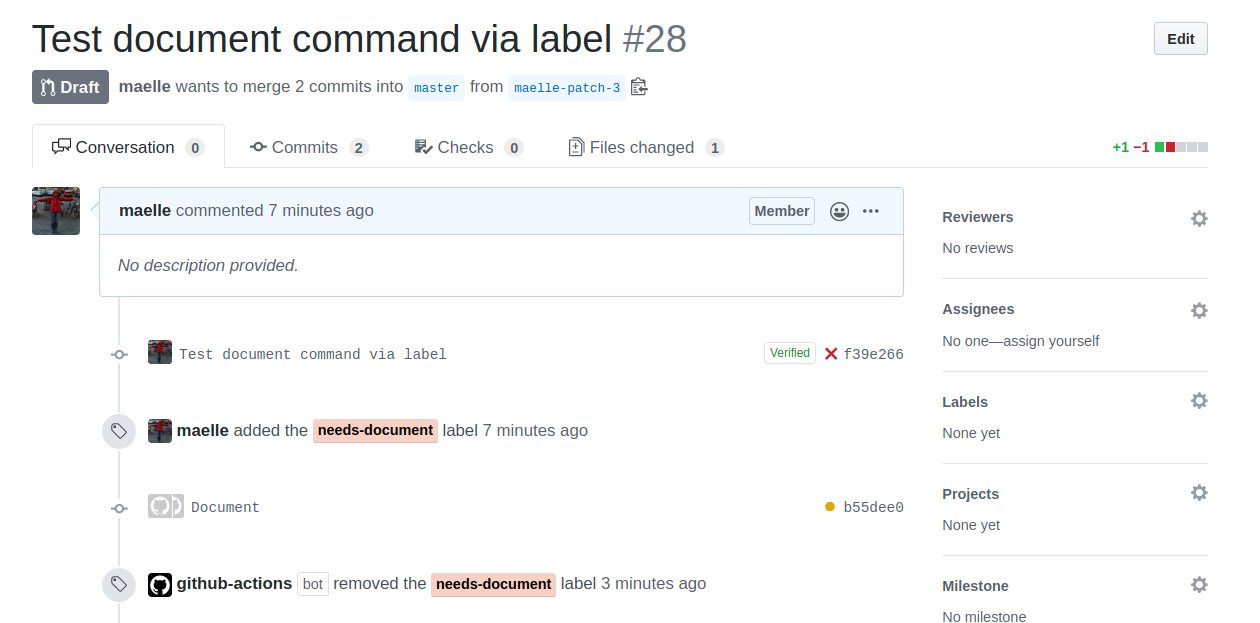

An interesting trick is e.g. the definition of “PR commands” via GitHub Actions.

Say someone sends a PR to your repo fixing a typo in the roxygen2 part of an R script, but doesn’t run devtools::document(), or someone quickly edits README.Rmd without knitting it.

You could fetch the PR locally and run devtools::document() or rmarkdown::render() yourself, respectively, or you could have a GitHub Actions bot do that for you!

💃

Refer to the workflows in e.g. the ggplot2 repo, triggered by writing a comment such as “/document”, and their variant in the pkgsearch repo, triggered by labeling the PR. Both approaches have their pros and cons. I like labeling because not having to type the command means you can’t make a typo. 😁 Furthermore, labeling doesn’t clutter the PR conversation; then again, you can hide comments later on whereas you cannot hide the labeling event from the PR history, so really, to each their own.

This example is specific to GitHub and GitHub Actions but you could think of similar ideas for other services.
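For comparison, here is a minimal sketch of the manual route that PR commands save you from. The helper name fix_pr_locally() is hypothetical. The sketch assumes usethis, devtools and rmarkdown are installed, that the repository is hosted on GitHub, and that the README source is README.Rmd at the package root.

```r
# Hypothetical helper (not part of any package): fetch a pull request
# locally and regenerate the files its author forgot to update.
# `number` is the pull request number on GitHub.
fix_pr_locally <- function(number) {
  # check out the pull request as a local branch
  usethis::pr_fetch(number)
  # regenerate man/ and NAMESPACE from the roxygen2 comments
  devtools::document()
  # re-knit the README
  rmarkdown::render("README.Rmd")
  # After reviewing and committing the changes, push them back to the PR:
  # usethis::pr_push()
}

# The automated alternative, assuming the "pr-commands" example workflow
# of r-lib/actions is still available under that name:
# usethis::use_github_action("pr-commands")
```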

Run something before you make a change

Let’s build on a meme to explain the idea in this subsection:

- 💤 Tired: Always remember to do things well

- 🔌 Wired: Use continuous integration to notice wrong stuff

- ✨ Inspired: Use precommit to not even commit wrong stuff

Git allows you to define “pre-commit hooks” that, e.g., prevent you from committing README.Rmd without knitting it.

You might know this if you use usethis::use_readme_rmd(), which adds such a hook to your project.

To take things further, the precommit R package provides two sets of utilities around the pre-commit framework: hooks that are useful for R packages or projects, and usethis-like functionalities to set them up.

Examples of available hooks, some of them possibly editing files, others only assessing them: checking your R code is still parsable, spell check, checking dependencies are listed in DESCRIPTION… Quite useful, if you’re up for adding such checks!
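Setting this up might look like the sketch below. The helper name setup_precommit() is hypothetical. The sketch assumes the precommit R package is installed and that the working directory is a Git repository. The default configuration file it creates is a starting point that you would then edit to pick the hooks you want.

```r
# Hypothetical helper (not part of any package): set up pre-commit hooks
# for the project in the current working directory.
setup_precommit <- function() {
  # install the pre-commit framework itself (needed once per machine)
  precommit::install_precommit()
  # add a .pre-commit-config.yaml to the project and activate the hooks
  precommit::use_precommit()
}

# Usage, once per project:
# setup_precommit()
```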

Check things before show time

Remembering to run automatic assessment tools is key, and both continuous integration and pre-commit hooks can help with that.

Now a less regular but very important occurrence is the perfect occasion to run tons of checks: preparing a CRAN release!

You know, the very nice moment when you use rhub::check_for_cran() among other things… What other things by the way?

CRAN has a submission checklist, and you could either roll your own or rely on usethis::use_release_issue() creating a GitHub issue with important items.

If you don’t develop your package on GitHub you could still have a look at the items for inspiration.

The devtools::release() function will ask you whether you ran a spell check.
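A pre-release routine built from the functions mentioned above could be sketched as follows. The helper name prepare_release() is hypothetical. The sketch assumes devtools and usethis are installed, a version of rhub that still provides check_for_cran(), and, for the last step, a package developed on GitHub.

```r
# Hypothetical helper (not part of any package): run checks before a
# CRAN submission for the package located at `pkg`.
prepare_release <- function(pkg = ".") {
  # last look for typos in the documentation
  devtools::spell_check(pkg)
  # local R CMD check
  devtools::check(pkg)
  # checks on R-hub platforms; assumes an rhub version with this function
  rhub::check_for_cran(pkg)
  # open a GitHub issue listing the release checklist
  # (acts on the active usethis project, not on `pkg`)
  usethis::use_release_issue()
}

# The submission itself stays interactive, since it asks you questions:
# devtools::release()
```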

Conclusion

In this blog post we went over tooling that makes your life as a package maintainer easier: R CMD check, lintr, goodpractice, covr, spelling, styler, roxygen2, usethis, pkgdown… and ways to integrate them into your workflow without having to remember them: continuous integration services, pre-commit hooks, using a checklist before a release.

Tools for improving your R package will often be quite specific to R in their implementation (but not principles), whereas tools for integrating them into your practice are more general: continuous integration services are used for all sorts of software projects, pre-commit is initially a Python project.

Therefore, there will be tons of resources about that out there, some of them under the umbrella of DevOps.

While introducing some automagic into your workflow might save you time and energy, there is some balance to be found in order not to spend too much time on “meta work”.

🕐

Furthermore, there are other aspects of package development we have not mentioned for which there might be interesting technical hacks: e.g. how do you follow the bug tracker of your package? How do you subscribe to the changelogs of its upstream dependencies?

Do you have any special trick? Please share in the comments below!